This is a collection of loose notes on how the human brain understands language. I will update this post when I find something new.

Some people like to think about the relationship between neuroscience and artificial intelligence; others prefer working on cost functions and optimization techniques. Inspiration from the human brain may not help with convergence, but there is no harm in knowing a little about it.

Background

Today we’ll focus on how the human brain understands language. To get started, the review paper Friederici A. D. 2011 is a must-read. As it is a little long, here are some things I found useful from the paper.

Language processing areas in the brain (a video on this topic and aphasia)

- Broca’s area

- Wernicke’s area

- Parts of the middle temporal gyrus (MTG) and the inferior parietal and angular gyrus in the parietal lobe

Three linguistic processing phases

- Phase 0 (initial phase, not counted): Acoustic-phonological analysis (speech)

- Phase 1: Sentence-level processing (local phrase structure is built on the basis of word category information)

- Phase 2: Syntactic and semantic computation

- Phase 3: Integration of different information types (if phase 2 cannot reach a compatible interpretation)

Semantic Processing in Human Brain

Probably the most interesting parts for Natural Language Processing (NLP) folks are phases 2 and 3.

Tools

Basically, researchers use two kinds of “tools” to investigate syntactic and semantic processing mechanisms in the human brain (see Haan M. D. 2002 for a comparison).

Functional magnetic resonance imaging (fMRI): images brain activity (via changes in blood oxygenation). It has good spatial resolution (on the order of millimetres) but poorer temporal resolution. More details can be found on Wikipedia and here.

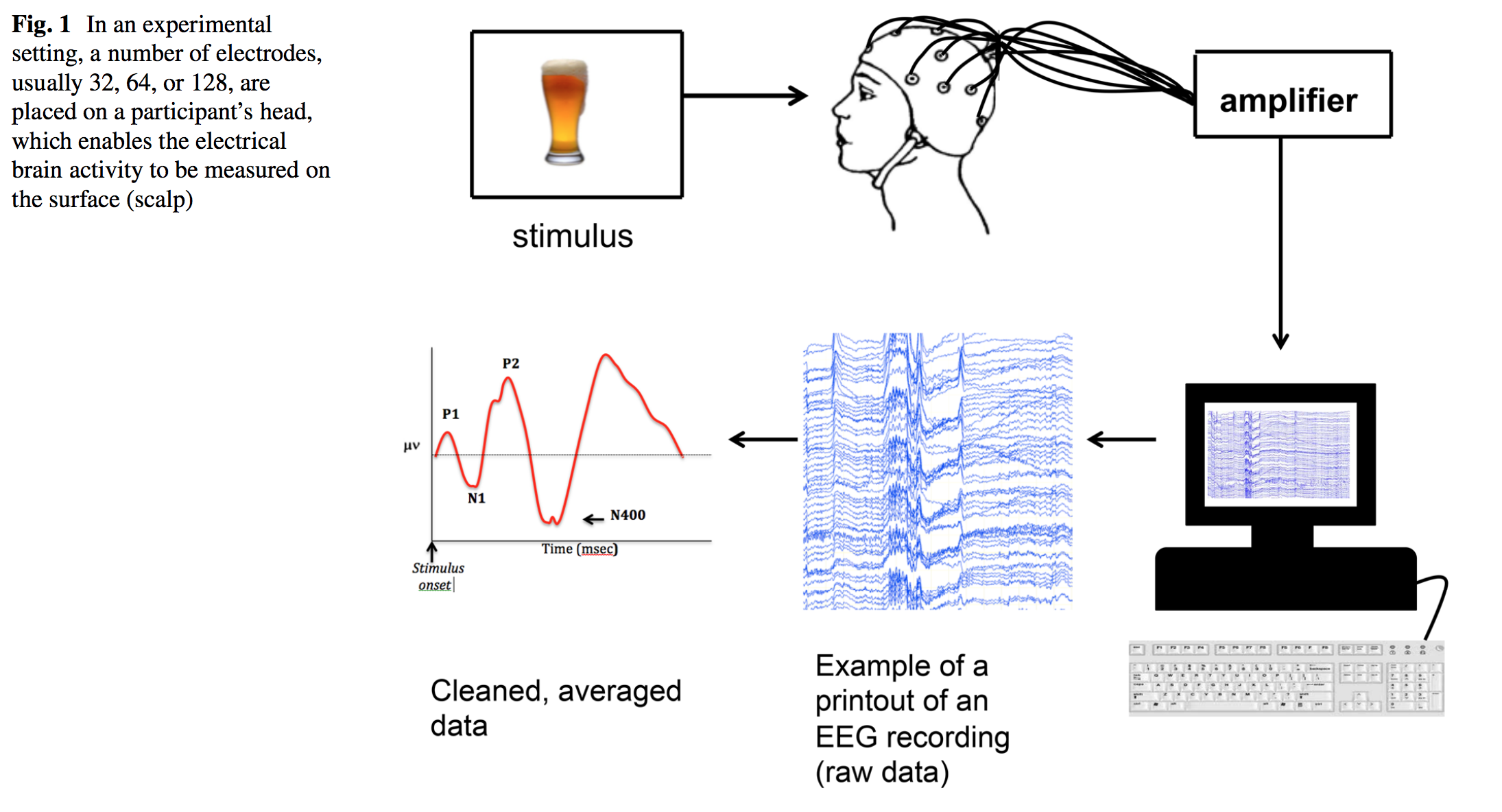

Event-related brain potential (ERP): the electrical potential generated by neuronal activity, measured by electroencephalography (EEG). ERPs have excellent temporal resolution (on the order of milliseconds) but relatively poor spatial resolution. More details on Wikipedia. The experimental setup for recording ERPs looks like this:


Findings

Some More Details

Experiment Design

Empirically, there are three basic methodological approaches to investigating syntactic and semantic processes during sentence comprehension.

- Vary the presence/absence of syntactic information (by comparing sentences to word lists) or of semantic information (by comparing real word lists/sentences to pseudoword lists/sentences).

- Introduce syntactic or semantic errors in sentences.

- Vary the complexity of the syntactic structure (including syntactic ambiguities) or the difficulty of semantic interpretation (including semantic ambiguities).

Beyond Lexical Semantics

Researchers have also investigated how extralinguistic information, such as pictures, is integrated into a preceding context. Some studies stay within the purely visual domain. For instance, West W. C. 2002 presented an experiment in which participants saw a series of pictures forming a story, where the last picture was either congruous or incongruous with the preceding ones. The study showed that incongruous pictures elicited increased N300 and N400 effects. For the semantic fit of pictures in a sentence context, ERP studies showed N400 amplitudes similar to those for words. The difference is that an N300, an earlier and separate negativity, is also observed (Willems R. M. 2008 gives a summary).
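To make the phrase "increased N400 effect" a bit more concrete, here is a minimal sketch of how such an effect is commonly quantified: average many stimulus-locked EEG epochs per condition to get an ERP, then compare the mean amplitude in a time window around 400 ms. Everything in it is an assumption for illustration and comes from none of the cited papers: the data are synthetic, and the sampling rate, the 300-500 ms window, the trial counts, the amplitudes and the function names are all made up. Real pipelines also do filtering, baseline correction and artifact rejection, which are omitted here.

```python
import math
import random
from statistics import fmean

SAMPLING_RATE_HZ = 250   # assumed sampling rate (one sample every 4 ms)
EPOCH_START_MS = -100    # epoch starts 100 ms before stimulus onset
EPOCH_END_MS = 800       # and ends 800 ms after it


def time_axis_ms():
    """Time stamps (ms, relative to stimulus onset) for one epoch."""
    step = 1000 / SAMPLING_RATE_HZ
    n_samples = int((EPOCH_END_MS - EPOCH_START_MS) / step) + 1
    return [EPOCH_START_MS + i * step for i in range(n_samples)]


def simulate_epoch(times, n400_size_uv, rng, noise_sd_uv=5.0):
    """Synthetic single trial: Gaussian noise plus a negative bump at 400 ms.

    The bump is only a toy stand-in for an N400, not a physiological model.
    """
    return [
        rng.gauss(0.0, noise_sd_uv)
        - n400_size_uv * math.exp(-((t - 400.0) ** 2) / (2 * 60.0 ** 2))
        for t in times
    ]


def average_epochs(epochs):
    """ERP = sample-by-sample average over trials time-locked to the stimulus."""
    return [fmean(samples) for samples in zip(*epochs)]


def mean_amplitude(erp, times, start_ms, end_ms):
    """Mean ERP amplitude (microvolts) inside a time window."""
    return fmean(v for v, t in zip(erp, times) if start_ms <= t <= end_ms)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
times = time_axis_ms()

# Hypothetical conditions: incongruous endings get a larger negativity.
congruous = [simulate_epoch(times, 1.0, rng) for _ in range(200)]
incongruous = [simulate_epoch(times, 5.0, rng) for _ in range(200)]

erp_congruous = average_epochs(congruous)
erp_incongruous = average_epochs(incongruous)

# A negative difference means the incongruous ERP is more negative.
n400_effect = (mean_amplitude(erp_incongruous, times, 300, 500)
               - mean_amplitude(erp_congruous, times, 300, 500))
print(f"N400 effect (incongruous - congruous): {n400_effect:.2f} microvolts")
```

Averaging is what makes the component visible: the noise in a single trial is larger than the simulated deflection, but it shrinks as trials are averaged, while the time-locked deflection stays. An N300 effect could be measured the same way with an earlier window.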

References

[1] Haan, Michelle de, and Kathleen M. Thomas. “Applications of ERP and fMRI techniques to developmental science.” Developmental Science 5.3 (2002): 335-343.

[2] Beres, Anna M. “Time is of the Essence: A Review of Electroencephalography (EEG) and Event-Related Brain Potentials (ERPs) in Language Research.” Applied Psychophysiology and Biofeedback 42.4 (2017): 247-255.

[3] Friederici, Angela D. “The brain basis of language processing: from structure to function.” Physiological Reviews 91.4 (2011): 1357-1392.

[4] West, W. Caroline, and Phillip J. Holcomb. “Event-related potentials during discourse-level semantic integration of complex pictures.” Cognitive Brain Research 13.3 (2002): 363-375.

[5] Willems, Roel M., Aslı Özyürek, and Peter Hagoort. “Seeing and hearing meaning: ERP and fMRI evidence of word versus picture integration into a sentence context.” Journal of Cognitive Neuroscience 20.7 (2008): 1235-1249.